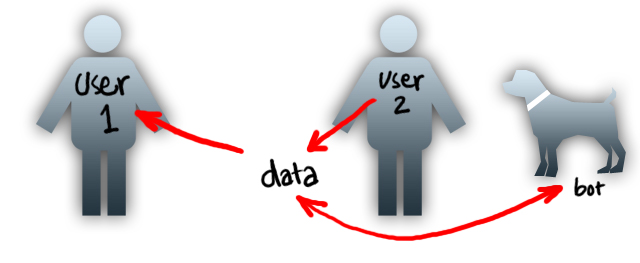

Back in the late 90s, most websites were made for the user, and they still are. But the definition of the user has changed over time and become more complicated. Initially the concept was much like that of software, "a person comes and uses a website," and over time it became "a person comes and accesses information." That soon brought in another kind of "user": the ones who put the data in. It quickly became a question of balance and priority.

This is just a broad overview.

Social networking sites as we see them today consider the latter, the users who provide data, to be their primary users. Sites like Google and Amazon consider the former, the users who access information, to be theirs.

The idea has to shift slowly toward combining the two: inviting the "accessing" user to "provide" more often. This can happen only when the "process of providing" becomes a lot easier.

Apart from that, we now have to keep in mind a third kind of user: "the bots," the crawlers run by the various search engines. Yes, a site also has to be easy to crawl for information, and the easier it is to crawl, the better its chances of visibility in search rankings.

What’s next?

The move should be to design for both types of human users and try to merge them. This would lead to a steady flow of updated information (not just status messages and tweets, but relevant information). The move has already started: personal computing devices are slowly changing size and shape to aid the process, and designs have to reflect the same shift.

Post author

Improve your web-forms and increase conversions

Monday 8 February 2010 | 10:18

http://conversionroom.blogspot.com/2010/02/improve-your-web-forms-and-increase.html

Turns out it’s already happening!